Introduction:

A web server is server software that satisfies client requests on the World Wide Web. It processes incoming network requests over HTTP and several related protocols.

A user agent, normally a web browser or a web crawler, initiates communication by requesting a specific resource over HTTP, and the server responds with the content of that resource or an error message. The resource is often a real file on the server’s storage, but this depends on the request and on how the web server is implemented.
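To make the request/response exchange concrete, here is a minimal Python sketch of how a server might answer a single HTTP GET request. The functions `handle_http_request` and `make_response`, and the dict standing in for the server's storage, are hypothetical illustrations rather than part of any real server; a real server also parses headers, streams files from disk and supports more methods.

```python
# Hypothetical sketch: a dict stands in for files on the server's storage.


def make_response(status: str, body: str) -> str:
    """Build a minimal HTTP/1.1 response: status line, one header, body."""
    length = len(body.encode("utf-8"))  # Content-Length counts bytes, not characters
    return f"HTTP/1.1 {status}\r\nContent-Length: {length}\r\n\r\n{body}"


def handle_http_request(raw_request: str, storage: dict[str, str]) -> str:
    """Answer a GET request with the resource's content or an error message."""
    request_line = raw_request.split("\r\n", 1)[0]  # e.g. "GET /index.html HTTP/1.1"
    parts = request_line.split()
    if len(parts) != 3:
        return make_response("400 Bad Request", "Bad Request")
    method, path, _version = parts
    if method != "GET":
        # This sketch only implements GET.
        return make_response("501 Not Implemented", "Not Implemented")
    if path not in storage:
        return make_response("404 Not Found", "Not Found")
    return make_response("200 OK", storage[path])
```

A request for a path that exists in the storage dict yields a `200 OK` response carrying the content, while an unknown path yields `404 Not Found`, mirroring the "content or an error message" behaviour described above.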

Path translation:

Web servers can map the path component of a URL (Uniform Resource Locator) to:

- A local file system resource (for example, a static HTML file).

- An internal or external program (for example, a CGI script).
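The mapping can be sketched in Python as follows. The directory layout (`/var/www/html` for files, `/usr/lib/cgi-bin` for programs reached through a `/cgi-bin/` prefix) is an assumption loosely modelled on a common Apache setup, and `translate_path` is a hypothetical helper; the point is only to show one URL path either resolving to a file or selecting a program to run.

```python
import posixpath
from urllib.parse import unquote, urlsplit

# Hypothetical layout (an assumption, not a standard): static files live under
# DOCUMENT_ROOT, programs under PROGRAM_ROOT, selected by PROGRAM_PREFIX.
DOCUMENT_ROOT = "/var/www/html"
PROGRAM_ROOT = "/usr/lib/cgi-bin"
PROGRAM_PREFIX = "/cgi-bin/"


def translate_path(url: str) -> tuple[str, str]:
    """Map a URL's path component to ("file", fs_path) or ("program", fs_path)."""
    path = unquote(urlsplit(url).path) or "/"
    # Collapse "." and ".." segments; normpath on an absolute path never
    # climbs above "/", so the result cannot escape the configured roots.
    clean = posixpath.normpath("/" + path.lstrip("/"))
    if clean.startswith(PROGRAM_PREFIX):
        # e.g. /cgi-bin/search -> an external program to execute
        return "program", posixpath.join(PROGRAM_ROOT, clean[len(PROGRAM_PREFIX):])
    if path.endswith("/"):
        # Directory request: serve its index file.
        clean = posixpath.join(clean, "index.html")
    return "file", posixpath.join(DOCUMENT_ROOT, clean.lstrip("/"))
```

Normalizing the path before joining it to the root is what stops a request such as `/../../etc/passwd` from escaping the document root; a production server would additionally guard against symbolic links that point outside it.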

Kernel-mode and user-mode web servers:

A web server can either be integrated into the OS kernel or run in user space. A user-space web server has to ask the operating system for permission to use more memory or CPU resources. These requests to the kernel take time, and they are not always satisfied, because the system reserves resources for its own use. A kernel-mode web server avoids these requests because it has direct access to hardware resources.

Architecture:

- Concurrent approach.

- Single-process event-driven approach.

Concurrent Approach:

A concurrent server handles multiple client requests at the same time. This architecture can be implemented in three ways:

- Multi-processing.

- Multi-threading.

- Hybrid.

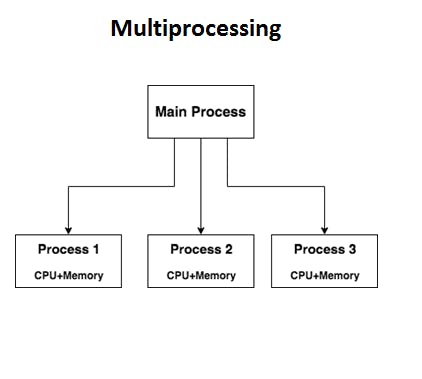

Multi-Processing Model:

In this model, a single parent process launches several single-threaded child processes and distributes incoming requests among them. Each child process is responsible for handling a single request.


It can be drawn like this:

Parent Process:

- Child Process 1 (single request)

  - Single thread 1

- Child Process 2 (single request)

  - Single thread 2

- Child Process 3 (single request)

  - Single thread 3

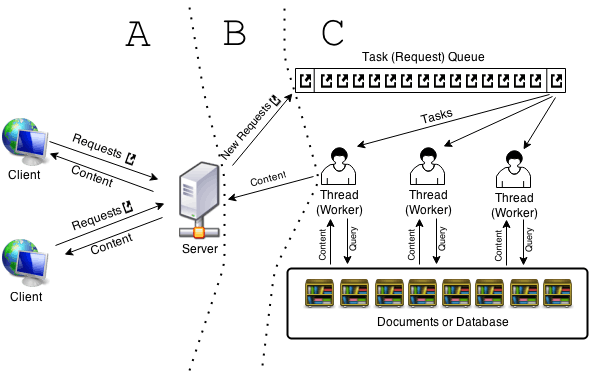
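Python's standard library ships a fork-based server that can illustrate this model. Note that `socketserver.ForkingTCPServer` forks a fresh child for each connection rather than keeping a pre-launched pool of children, so it is a simplified stand-in for the model described above; `PidHandler` and `make_forking_server` are hypothetical names, and the class is only available on Unix-like systems.

```python
import os
import socketserver


class PidHandler(socketserver.StreamRequestHandler):
    """Runs inside a forked, single-threaded child process, one connection each."""

    def handle(self) -> None:
        self.rfile.readline()  # read (and ignore) the request line
        self.wfile.write(f"handled by pid {os.getpid()}\n".encode())


def make_forking_server(host: str = "127.0.0.1",
                        port: int = 0) -> socketserver.ForkingTCPServer:
    # ForkingTCPServer (Unix only) forks a new child for every accepted
    # connection; the parent process only accepts and reaps children.
    return socketserver.ForkingTCPServer((host, port), PidHandler)
```

Calling `serve_forever()` on the returned server runs it indefinitely; each reply reports a child PID that differs from the parent's, showing that the request was handled in a separate process.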

Multi-Threading Model:

Unlike the multi-processing model, a single process creates multiple threads, and each thread handles one request.

It can be drawn like this:

Single Process:

- Thread 1 (single request)

- Thread 2 (single request)

- Thread 3 (single request)

N.B.: On Windows, starting a new process is expensive, while starting a new thread is comparatively cheap. On Unix systems, both processes and threads are relatively cheap to create.
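The threaded counterpart can be sketched with `socketserver.ThreadingTCPServer`, which starts a new thread per connection inside the one server process. `ThreadInfoHandler` and `make_threading_server` are hypothetical names chosen for illustration.

```python
import os
import socketserver
import threading


class ThreadInfoHandler(socketserver.StreamRequestHandler):
    """Runs in its own thread, inside the single server process."""

    def handle(self) -> None:
        self.rfile.readline()  # read (and ignore) the request line
        reply = f"pid {os.getpid()} thread {threading.get_ident()}\n"
        self.wfile.write(reply.encode())


def make_threading_server(host: str = "127.0.0.1",
                          port: int = 0) -> socketserver.ThreadingTCPServer:
    # ThreadingTCPServer starts one new thread per accepted connection;
    # all of those threads share the memory of the one server process.
    return socketserver.ThreadingTCPServer((host, port), ThreadInfoHandler)
```

Every reply reports the same process ID as the server itself, but a thread identifier different from the server's main thread.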

Hybrid Model:

It combines both models: multiple processes are created, and each process starts multiple threads. Each thread handles one connection. Because several threads share one process, this approach puts less load on system resources than creating a separate process for every connection.

It can be drawn like this:

Process 1:

- Thread 1

- Thread 2

- ......

Process 2:

- Thread 3

- Thread 4

- ......

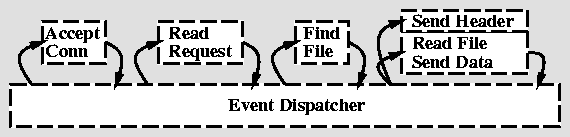
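A rough sketch of the hybrid model, assuming a Unix-like system where `os.fork` is available: the parent opens one listening socket and forks several worker processes, and each worker runs a fixed pool of threads that all call `accept()` on the shared socket, so each thread serves one connection at a time. The function names and pool sizes are hypothetical; production servers built on this model (Apache httpd's worker MPM is one example) add much more, such as process supervision and graceful restarts.

```python
import os
import signal
import socket
import threading


def handle_connection(conn: socket.socket) -> None:
    """Serve one connection: read the request, reply with who handled it."""
    with conn:
        conn.recv(1024)  # read (and ignore) the request; a real server would parse it
        reply = f"pid {os.getpid()} thread {threading.get_ident()}\n"
        conn.sendall(reply.encode())


def worker_process(listener: socket.socket, threads_per_process: int) -> None:
    """Body of one child process: a fixed pool of threads sharing the listener."""
    signal.signal(signal.SIGTERM, signal.SIG_DFL)  # let the parent stop us

    def accept_loop() -> None:
        while True:
            conn, _addr = listener.accept()  # each connection goes to one waiting thread
            try:
                handle_connection(conn)
            except OSError:
                pass  # a misbehaving client must not kill the worker thread

    threads = [threading.Thread(target=accept_loop, daemon=True)
               for _ in range(threads_per_process)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()  # blocks until the parent terminates this process


def start_hybrid_server(processes: int = 2, threads_per_process: int = 4,
                        host: str = "127.0.0.1", port: int = 0):
    """Fork the worker processes (Unix only); return (listener, child_pids)."""
    listener = socket.create_server((host, port))
    child_pids = []
    for _ in range(processes):
        pid = os.fork()
        if pid == 0:  # in the child
            try:
                worker_process(listener, threads_per_process)
            finally:
                os._exit(0)  # never fall back into the parent's code path
        child_pids.append(pid)
    return listener, child_pids


def stop_hybrid_server(listener: socket.socket, child_pids: list[int]) -> None:
    for pid in child_pids:
        os.kill(pid, signal.SIGTERM)
        os.waitpid(pid, 0)  # reap the child so it does not linger as a zombie
    listener.close()
```

Replies can come from any worker process and any of its threads; the parent itself never handles a connection, it only creates and later stops the workers.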

Single-Process Event-Driven Approach:

The single-process event-driven (SPED) architecture uses a single event-driven server process to process multiple HTTP requests concurrently. The server uses non-blocking system calls to perform asynchronous I/O operations, and an operation such as the BSD UNIX select or the System V poll call is used to check for I/O operations that have completed.
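The difference between blocking and non-blocking calls, and the role of select, can be seen in a few lines of Python using a connected socket pair. `demo_nonblocking_select` is a hypothetical demo function; `select.select` is Python's wrapper around the BSD-style `select` call, and `select.poll` (not shown) wraps the System V-style `poll` on Unix.

```python
import select
import socket


def demo_nonblocking_select() -> list[str]:
    """Show a non-blocking recv failing fast, then select() reporting readiness."""
    events = []
    server_side, client_side = socket.socketpair()
    with server_side, client_side:
        server_side.setblocking(False)
        try:
            server_side.recv(1024)  # nothing has been sent yet
        except BlockingIOError:
            # A blocking socket would wait here; a non-blocking one returns at once.
            events.append("recv would block")
        # Timeout 0: check readiness once and return immediately.
        readable, _, _ = select.select([server_side], [], [], 0)
        events.append(f"readable before send: {bool(readable)}")
        client_side.sendall(b"GET / HTTP/1.1\r\n\r\n")
        # Wait up to one second for the socket to become readable.
        readable, _, _ = select.select([server_side], [], [], 1.0)
        events.append(f"readable after send: {bool(readable)}")
        if readable:
            events.append(f"read {len(server_side.recv(1024))} bytes")
    return events
```

The non-blocking `recv` raises `BlockingIOError` immediately instead of waiting, and `select` reports the socket as readable only after the peer has sent data, which is exactly the signal an event-driven server waits for before doing the next piece of work.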

As an alternative to synchronous blocking I/O, the event-driven approach is common in server architectures. A typical model maps a single thread to multiple connections; that thread then handles all events arising from I/O operations on those connections and their requests.

A SPED server can be thought of as a state machine that performs one basic step of serving an HTTP request at a time, thus interleaving the processing steps of many HTTP requests. In each iteration, the server performs a select to check for completed I/O events (new connection arrivals, completed file operations, and client sockets that have received data or have space in their send buffers). When an I/O event is ready, the server completes the corresponding basic step and, if appropriate, initiates the next step of that HTTP request.
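The state-machine loop described above can be sketched with Python's `selectors` module, whose `DefaultSelector` picks the most efficient readiness mechanism the platform offers (for example epoll on Linux, with poll or select as fallbacks). This is a simplified sketch under stated assumptions: it only handles network sockets (no asynchronous file operations), answers every complete request with a fixed body, and closes the connection after each response. `SpedServer` and `Connection` are hypothetical names.

```python
import selectors
import socket


class Connection:
    """Per-connection state for one HTTP exchange (hypothetical helper)."""

    def __init__(self, sock: socket.socket) -> None:
        self.sock = sock
        self.inbuf = b""   # request bytes received so far
        self.outbuf = b""  # response bytes not yet sent


class SpedServer:
    """Single-process, single-threaded event loop (hypothetical sketch)."""

    BODY = b"hello from a single-process event loop\n"

    def __init__(self, host: str = "127.0.0.1", port: int = 0) -> None:
        self.selector = selectors.DefaultSelector()
        self.listener = socket.create_server((host, port))
        self.listener.setblocking(False)
        # data=None marks the listening socket in the selector.
        self.selector.register(self.listener, selectors.EVENT_READ, data=None)
        self.running = True

    def serve(self, poll_interval: float = 0.1) -> None:
        while self.running:
            # One iteration: find ready sockets, then do one basic step for each.
            for key, mask in self.selector.select(timeout=poll_interval):
                if key.data is None:
                    self._accept()
                elif mask & selectors.EVENT_READ:
                    self._read(key.data)
                elif mask & selectors.EVENT_WRITE:
                    self._write(key.data)
        # Close the listener and any connections still registered.
        for key in list(self.selector.get_map().values()):
            key.fileobj.close()
        self.selector.close()

    def _accept(self) -> None:
        try:
            sock, _addr = self.listener.accept()
        except BlockingIOError:
            return  # readiness was spurious; try again next iteration
        sock.setblocking(False)
        self.selector.register(sock, selectors.EVENT_READ, data=Connection(sock))

    def _read(self, conn: Connection) -> None:
        try:
            chunk = conn.sock.recv(4096)
        except BlockingIOError:
            return
        except OSError:
            chunk = b""  # treat errors such as a reset like a closed connection
        if not chunk:
            self._close(conn)
            return
        conn.inbuf += chunk
        if b"\r\n\r\n" in conn.inbuf:
            # Headers complete: prepare the response, then wait for writability.
            conn.outbuf = (b"HTTP/1.1 200 OK\r\nContent-Length: %d\r\n"
                           b"Connection: close\r\n\r\n" % len(self.BODY)) + self.BODY
            self.selector.modify(conn.sock, selectors.EVENT_WRITE, data=conn)

    def _write(self, conn: Connection) -> None:
        try:
            sent = conn.sock.send(conn.outbuf)  # may send only part of the buffer
        except BlockingIOError:
            return
        except OSError:
            self._close(conn)
            return
        conn.outbuf = conn.outbuf[sent:]
        if not conn.outbuf:
            self._close(conn)

    def _close(self, conn: Connection) -> None:
        self.selector.unregister(conn.sock)
        conn.sock.close()
```

Each call to `_read` or `_write` is one basic step. Because no call ever blocks, a slow client that has sent only part of its request does not stop the loop from serving other clients in the meantime.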

A good analogy is a coffee shop.